We can unload Redshift data to S3 in Parquet format directly.

In this example, the project uses the MaxCompute V2.0 data types because the TPC-H dataset uses the MaxCompute V2.0 data types and the Decimal 2.0 data type. If you want to configure the project to use the MaxCompute V2.0 data types and the Decimal 2.0 data type, add the corresponding commands at the beginning of the CREATE TABLE statements.

Create a RAM role that has the OSS access permissions and assign the RAM role to the RAM user. For more information, see STS authorization.

Run the LOAD command multiple times to load all data from OSS to the MaxCompute tables that you created, and execute the SELECT statement to query and verify the imported data.

# How to unload Redshift data to S3 in Parquet format

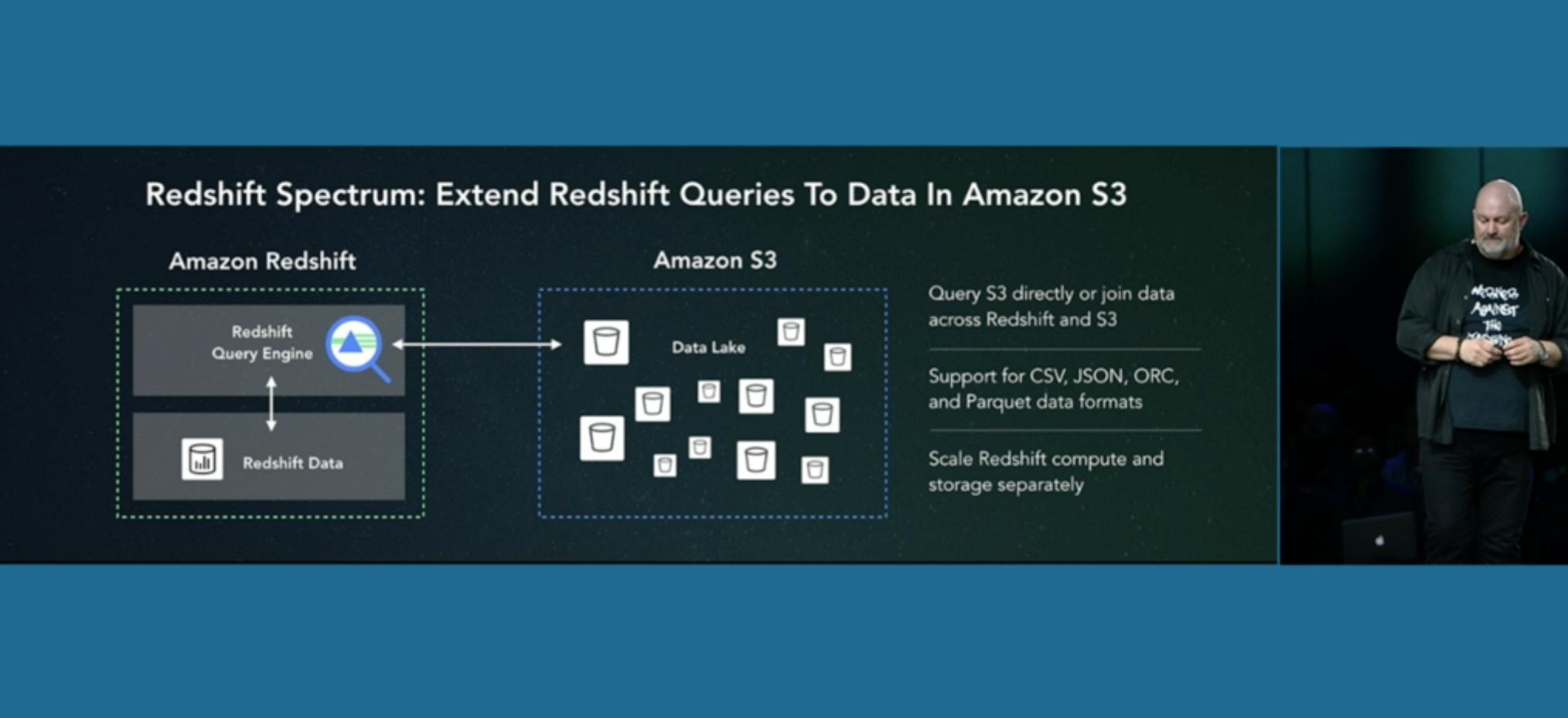

Spark is not needed anymore.

The first thing we need to do is modify our Redshift cluster's IAM role to allow writes to S3. We go to our cluster in the Redshift panel and click Properties, where we will see the link to the IAM role attached to the cluster. We click on it and it will open the IAM role page.

The sample commands unload data from Amazon Redshift to Amazon S3 with `IAM_ROLE 'arn:aws:iam::xxxx:role/redshift_s3_role'`, using one `TO` target per table:

- `TO 's3://bucket_name/unload_from_redshift/customer_parquet/customer_'`
- `TO 's3://bucket_name/unload_from_redshift/orders_parquet/orders_'`
- `TO 's3://bucket_name/unload_from_redshift/lineitem_parquet/lineitem_'`
- `TO 's3://bucket_name/unload_from_redshift/nation_parquet/nation_'`
- `TO 's3://bucket_name/unload_from_redshift/part_parquet/part_'`
- `TO 's3://bucket_name/unload_from_redshift/partsupp_parquet/partsupp_'`
- `TO 's3://bucket_name/unload_from_redshift/region_parquet/region_'`
- `TO 's3://bucket_name/unload_from_redshift/supplier_parquet/supplier_'`

An UNLOAD manifest can include a meta key, which is required for an Amazon Redshift Spectrum external table and for loading data files in ORC or Parquet format. The meta key contains a `content_length` key whose value is the actual size of the file in bytes.

To check the result, right-click the exported folder and select Get total size to obtain the total size of the folder and the number of files in it.

On the Amazon Web Services (AWS) platform, create an IAM user who uses the programmatic access method to access Amazon S3.

Create a Resource Access Management (RAM) user and grant the relevant permissions to the RAM user:

1. Log on to the RAM console and create a RAM user. For more information, see Create a RAM user.
2. Find the RAM user that you created, and click Add Permissions in the Actions column.
3. On the page that appears, select AliyunOSSFullAccess and AliyunMGWFullAccess, and click OK. The AliyunOSSFullAccess policy authorizes the RAM user to read data from and write data to OSS buckets. The AliyunMGWFullAccess policy authorizes the RAM user to perform online migration jobs.
4. In the left-side navigation pane, click Overview.
5. In the Account Management section of the Overview page, click the link under RAM user logon, and use the RAM user to log on to the Alibaba Cloud Management Console.

Then create the tables in MaxCompute. You can optionally use the ad hoc query feature to execute the SQL statements.
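The unload targets above all follow the same pattern, so the full statements can be generated rather than typed by hand. The following is a minimal sketch, not the author's exact commands: it assumes each table is unloaded with a plain `SELECT *` and that `FORMAT AS PARQUET` is the desired output option. The helper name `build_unload` is hypothetical, and the bucket name and role ARN are the placeholders from the text.

```python
# TPC-H tables that appear in the S3 targets above.
TPCH_TABLES = [
    "customer", "orders", "lineitem", "nation",
    "part", "partsupp", "region", "supplier",
]


def build_unload(
    table,
    bucket="bucket_name",                                  # placeholder from the text
    prefix="unload_from_redshift",
    iam_role="arn:aws:iam::xxxx:role/redshift_s3_role",    # placeholder from the text
):
    """Return a Redshift UNLOAD statement that writes one table as Parquet.

    Assumption: the whole table is exported with SELECT *; adjust the
    query if only some columns or rows are needed.
    """
    target = f"s3://{bucket}/{prefix}/{table}_parquet/{table}_"
    return (
        f"UNLOAD ('SELECT * FROM {table}')\n"
        f"TO '{target}'\n"
        f"IAM_ROLE '{iam_role}'\n"
        "FORMAT AS PARQUET;"
    )


if __name__ == "__main__":
    # Print one statement per table, ready to paste into a SQL client.
    for name in TPCH_TABLES:
        print(build_unload(name))
        print()
```

Each generated statement can be run in any SQL client connected to the cluster, provided the IAM role attached to the cluster is allowed to write to the bucket.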
